
Date: Friday, 27 October 2023 | Author: Charlotte Abrahams | In: Technical

Where artificial intelligence and machine learning were once seen as the villainous plot point of futuristic action movies, we now live in a world where AI is becoming more and more integrated with our everyday lives. From ChatGPT to Midjourney, many of us are now familiar with a variety of AI tools aimed at making our everyday lives easier, especially for businesses and working professionals.

Businesses are beginning to integrate AI with everything from marketing materials to internal operations – but what are the risks that come with this? How safe is AI, really?

In this article we will cover some of the main risks that could come with integrating AI into your business, and will discuss a few ways to make sure you’re using AI responsibly and ethically.

Using any online tool comes with a certain level of risk. AI, however, has an extra layer of risk because it learns from the information you, and other internet users, give it. Whilst this active machine learning is one of the draws of using AI, the lack of human cognition that comes with AI may lead to several costly risks for your business.

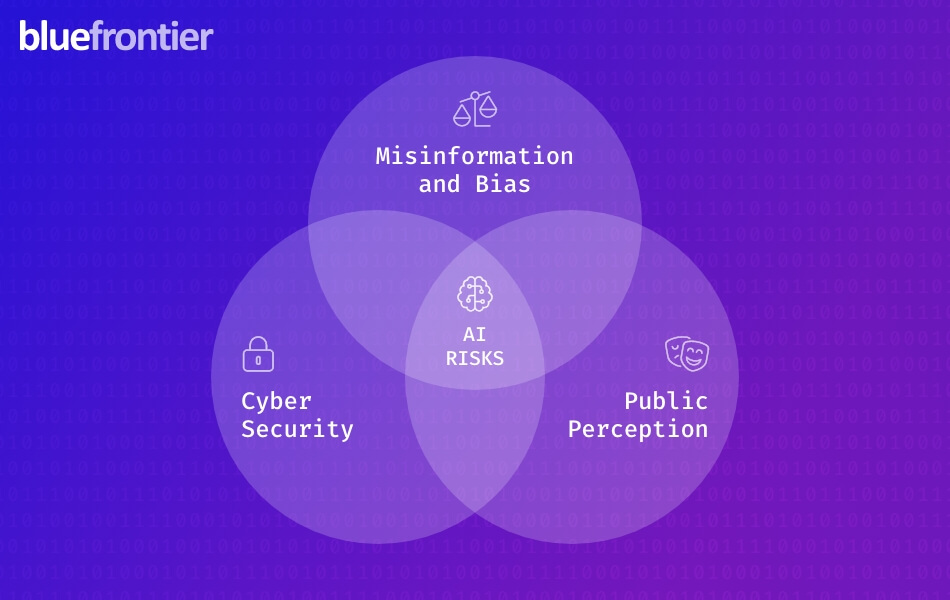

The primary risks that come from using AI can be broken down into 3 main areas: cyber security, public perception, and accuracy.

AI tools have been involved in several data leaks suffered by large companies in 2023 – including an incident at Samsung, where employees entered confidential company information into ChatGPT. Whilst AI itself may work as a tool to aid malicious hackers and their operations, using AI without security in mind could quickly lead to a data breach for your company.

Many businesses have started to rely on AI language models, such as ChatGPT, to help write their website content, ad content, and code. When giving AI a brief to work from, it is tempting to supply as much information as possible to allow the AI to give you a detailed first result that will require less (if any) human editing. The problem with this is that any sensitive company information put into an AI language model brief leaves your control: it may be stored by the provider and used to train the model, and could become accessible to cyber criminals if it resurfaces in another user's results or the provider itself is breached.

At best, this may mean an unexpected leak on new products or service offerings, but at worst this could give hackers the information they need to action a complete system breach.
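One way to reduce this risk is to check a brief for obviously sensitive material before it is pasted into an AI tool. The Python snippet below is a minimal sketch of that idea, not a complete safeguard: the pattern names and regular expressions are illustrative assumptions, and a real check would need to reflect what counts as sensitive for your own business.

```python
import re

# Hypothetical patterns for illustration only; extend these for your business.
SENSITIVE_PATTERNS = {
    "email address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "API key or password": re.compile(
        r"\b(api[_-]?key|password|secret)\b\s*[:=]\s*\S+", re.IGNORECASE
    ),
    "confidentiality marker": re.compile(
        r"\b(confidential|internal only|do not share)\b", re.IGNORECASE
    ),
}


def find_sensitive(brief: str) -> list[str]:
    """Return the label of every pattern that matches somewhere in the brief."""
    return [
        label
        for label, pattern in SENSITIVE_PATTERNS.items()
        if pattern.search(brief)
    ]


brief = "Write launch copy. CONFIDENTIAL: contact jo@example.com, password = hunter2"
for label in find_sensitive(brief):
    print(f"Remove before sending: {label}")
```

A check like this only catches what its patterns describe, so it works best as a prompt for a human to re-read the brief rather than as a replacement for that review.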

Although more and more people are beginning to use AI and view it in a positive way, the public perception of AI is still somewhat negative due to concerns about how it is used. This is especially true in the Healthcare, Government, and Public Service sectors.

Businesses that actively use AI, but are transparent about it and thoughtful in how it is used, tend to have better public sentiment. On the other hand, businesses that attempt to hide their use of AI, or produce online content that does not feel human-like, are typically seen as less trustworthy. So, to avoid a public perception disaster for your business, it is vital that any use of AI is carried out responsibly, openly, and ethically. Even more so, it is important to understand the limitations of AI and when it is necessary for a human to step in.

Being accurate in the information you publish is important for every business – not only to make sure you are supplying your customers with correct facts and advice, but also to meet Google’s requirements for helpful content that ranks well.

Although you may think AI-generated content will be factual due to its machine learning, it is important to understand that AI learns from information gathered across the internet as well as the information directly given to it. Critically, almost 62% of the information on the internet is unreliable, which means that the majority of information AI learns from may be incorrect.

As well as this, AI will learn from the biases and views of the people who use it. For this reason, AI cannot be trusted to supply unbiased information, and by using it you may inadvertently run the risk of publishing content that is discriminatory or harmful to particular groups.

While many AI tools use continued machine learning to build ever-stronger ‘human likeness’, AI is fundamentally non-human. Whilst this does not mean that AI is ‘evil’ or ‘anti-human’, despite what Hollywood may have us believe, it does mean that AI lacks the human cognitive capacity to understand responsibility and ethics.

That means it’s up to us humans to make sure we use AI in morally regulated ways.

To address the risks associated with using AI, and to ensure your operations, security, or public sentiment are not compromised, it’s important to think about the following things every time you use AI for your business.

AI systems should be transparent in their decision-making processes – do you understand how the tool you’re using works and how the system has reached its conclusion? In addition to this, are you being transparent internally and/or externally about your use of AI? Are there clear reasons for using AI over humans that have led to your decision?

If you regularly use AI within your business, then consider having an AI Statement included within the Policies section of your website. For any published content that was aided by AI, consider adding a transparency statement at the end.

AI systems should treat users equally, without discrimination towards any individual or group – is the tool you’re using fair? It is important that you make sure the results produced by the AI system are fair and unbiased, which can usually only be achieved by human moderation and a strict editing process.

You should also consider if it is fair of you to use AI in each instance – will it produce the required outcome that your audience or colleagues deserve, or are you using it to cut corners?

The AI system you use should be accountable for its actions. There should be mechanisms in place to trace the decisions made by the AI system back to the data it was trained on. Does the tool you’re using have this level of traceable accountability available?

Before using or publishing AI-generated results, you should also be accountable for fact-checking and moderating content to avoid spreading false information. Are you able to ensure that the content you publish is completely factual, and correct? Are you willing to be held accountable if it is not?

Does the AI system you’re using scrape ‘publicly available’ data from questionable sources? Does it require any personal data to sign up or use the tool? Is there small print to say that the data you input will contribute to the tool’s learning capabilities or improve the AI’s services?

If the answer to any of these questions is ‘yes’, then you must be extremely cautious about the information you provide to reduce the risk of a privacy breach. At the very least, be sure to omit any brand mentions in your brief so that the information you give is harder to link back to your business.
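Omitting brand mentions can be done by hand, but it is easy to miss one in a long brief. The sketch below shows one possible way to automate it in Python, under stated assumptions: the function name `redact_brands`, the `[BRAND]` placeholder, and the example company name are all hypothetical, and you would supply your own list of brand and product names.

```python
import re


def redact_brands(brief: str, brand_terms: list[str], placeholder: str = "[BRAND]") -> str:
    """Replace each brand term in the brief with a neutral placeholder."""
    if not brand_terms:
        return brief
    # Try longer terms first so "Acme Widgets Ltd" is replaced before "Acme".
    terms = sorted(brand_terms, key=len, reverse=True)
    # Word boundaries stop a short term matching inside a longer, unrelated word.
    pattern = re.compile(
        r"\b(?:" + "|".join(re.escape(term) for term in terms) + r")\b",
        re.IGNORECASE,
    )
    return pattern.sub(placeholder, brief)


brief = "Write a product page for Acme Widgets Ltd. Acme was founded in 1990."
print(redact_brands(brief, ["Acme", "Acme Widgets Ltd"]))
# prints: Write a product page for [BRAND]. [BRAND] was founded in 1990.
```

Removing names only makes the information harder to link back to your business. It does not make sensitive details safe to share, so anything confidential should still be left out of the brief entirely.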

You now know the main risks associated with using AI for your business, and what you should think about before using an AI tool. But the world of AI is a complex one, with plenty of benefits as well as trip hazards that you must be aware of. So, what are our top 5 takeaway tips to help you navigate using AI responsibly, ethically, and safely for your business?

We hope you’ve learned something about using AI responsibly and ethically. If you are worried about the risks of AI, or have questions about how it can be implemented with your business, then get in touch.

*Disclaimer – this article was written by humans.

Digital Content Strategy Manager

Charlotte is passionate about all things media. Highly creative but also analytical, Charlotte loves to create original and exciting campaigns that make use of innovative marketing technologies and trends to drive KPIs. As an avid content writer, Charlotte loves nothing more than sitting down to write a blog post or web page on a weekday afternoon. Now certified in Marketing and Brand Strategy, Charlotte's days are often filled by coordinating holistic marketing strategies and brand audits for clients, working with team members across Freebook Global Technologies to deliver everything from market research to website launches.
